Magic Blocks: An LLM App That Creates LLM Apps

Written on 01 July 2024

I love ChatGPT.

I use it every day. Multiple times a day.

But I also hate using ChatGPT. I hate that every new conversation starts from scratch. To get high-quality, personalized results, I would use prompts that are several paragraphs long and include multiple examples. Re-typing such optimized prompts every time is a pain. And there is no easy way to find a past conversation with my optimized prompt.

As I was trying to learn more about building with Large Language Models (LLMs), I decided to build an LLM app to solve my need. That's how Magic Blocks was born.

For my future self, I wrote this essay to record how I built it and what I learned.

What is Magic Blocks?

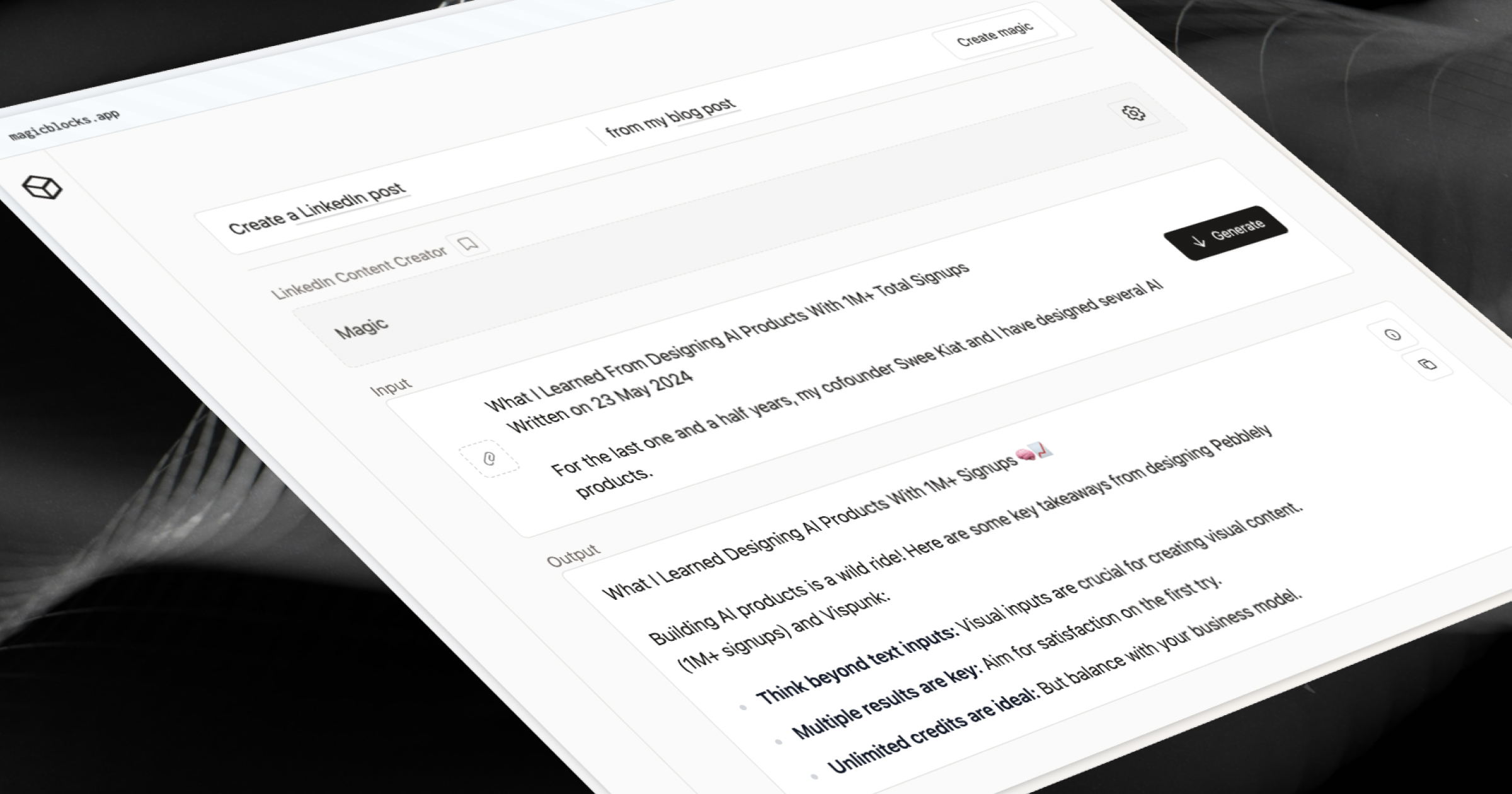

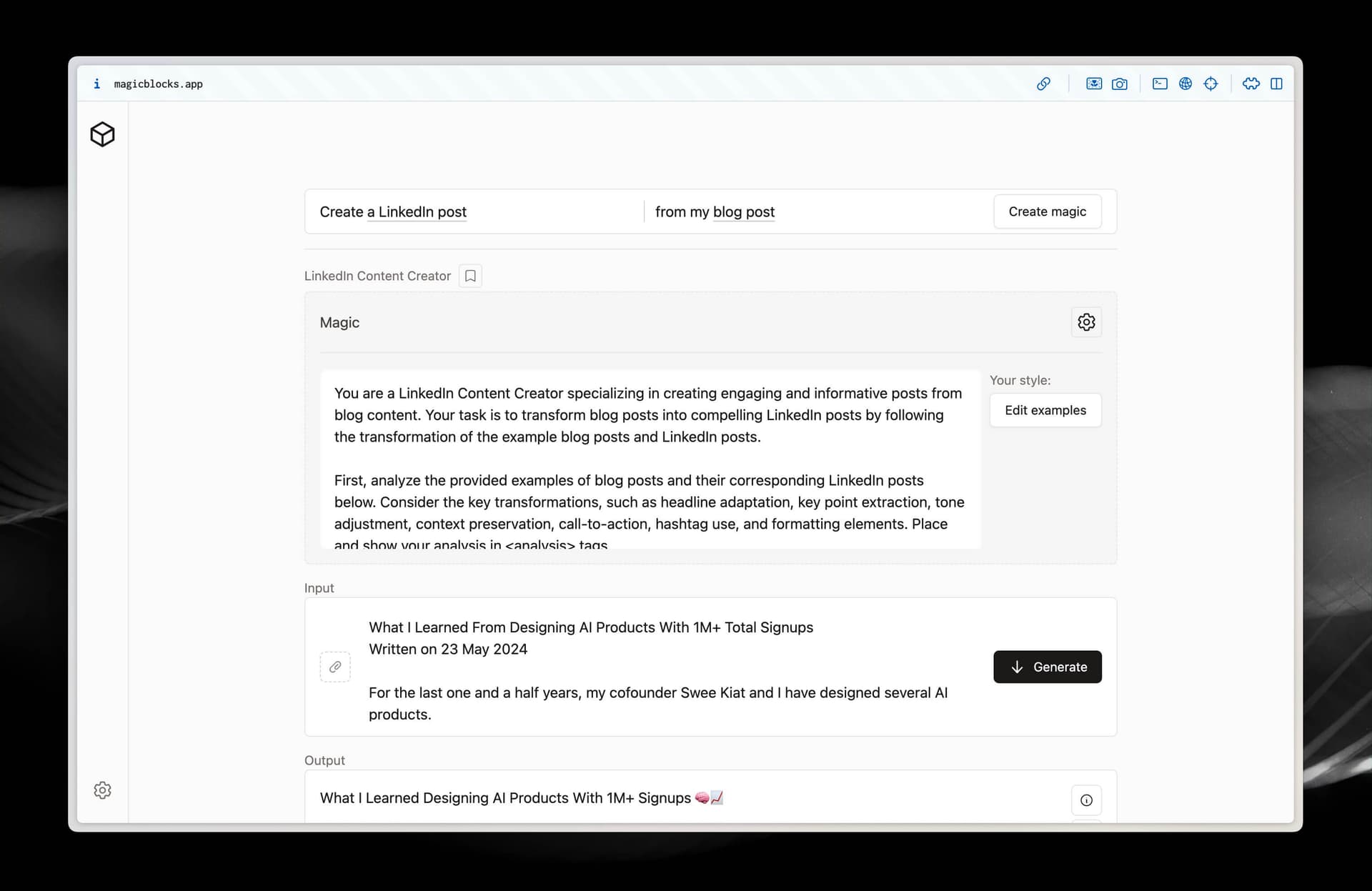

Magic Blocks is a little app that lets you generate magic blocks. You can then use a magic block to generate your desired outputs from your materials, such as a tweet from a blog post, an explainer video script from a scientific paper, or Mandarin text from English text. Most importantly, each magic block learns your style from the input-output examples you provide to give you personalized results. And, of course, you can save your favorite magic blocks to quickly reuse them in the future.

Here's how it works:

- State the content format you want to create from your original content (e.g. LinkedIn post from blog post)

- Get a generated magic block with an AI-crafted prompt (You can edit the prompt if you want.)

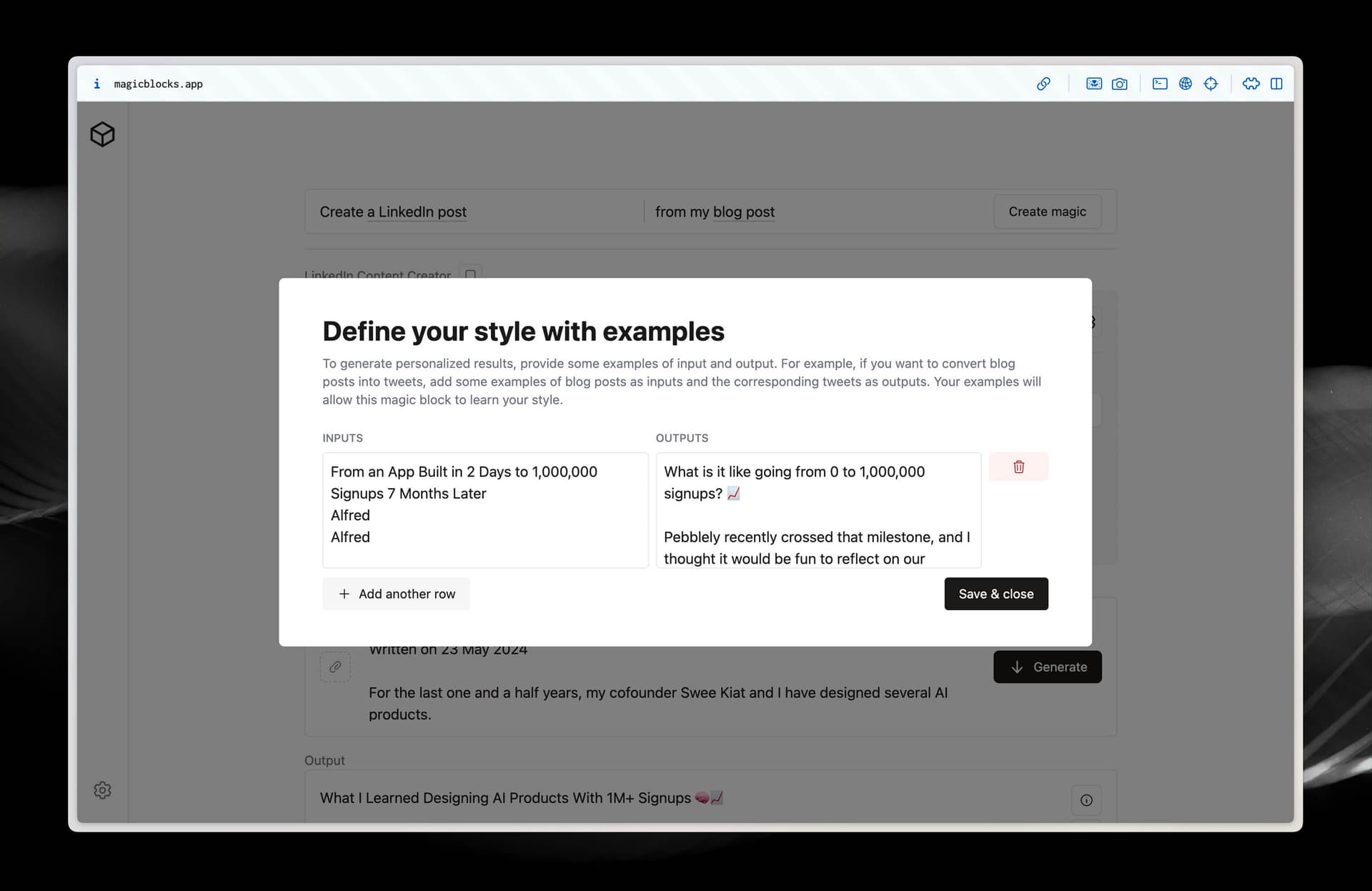

- Add some input-output examples to define your style (Ideally they should have the same style. Otherwise, keep to one input-output example.)

- Enter the content you want to transform

- Click "Generate" to transform your content into your desired format, based on your style

- Bonus: See how the transformation was done by clicking the information button

- Optional: Save the magic block for future use by clicking the bookmark button beside the title.

Part of the inspiration for Magic Blocks came from Every's Spiral. Spiral lets you automate repetitive writing tasks by transforming your content into tweets, summaries, and more. I wanted to see if I could extend the idea further to generate anything from anything. Financial analyses from PDF financial statements. Charts from sales data. Maybe even TikTok videos from YouTube videos. But each of these would require its own specific prompt, and I cannot write the prompts in advance when I do not know all the ways people might use it. Nor would that be scalable.

My solution was inspired by my cofounder Swee Kiat's Interformer project. Interformer lets you use an LLM to generate small apps. Magic Blocks lets you use an LLM to generate prompts for your specific tasks. An LLM app for creating LLM apps.

As a prototype, Magic Blocks can only accept and generate text now. The goal is to support documents, videos, and audio in the future (which shouldn't be too hard with the new multimodal APIs). It would also be awesome if you could chain magic blocks together, taking the output of one or multiple magic blocks as the input of another magic block.

You can try Magic Blocks for free (with your own OpenAI or Gemini API key)!

(I find OpenAI's GPT-4o gives better results but you have to pay for it. Google's Gemini 1.5 Flash is free and gives acceptable results.)

Tech stack

For the tech stack, I mostly picked tools that I wanted to get better at.

Frontend:

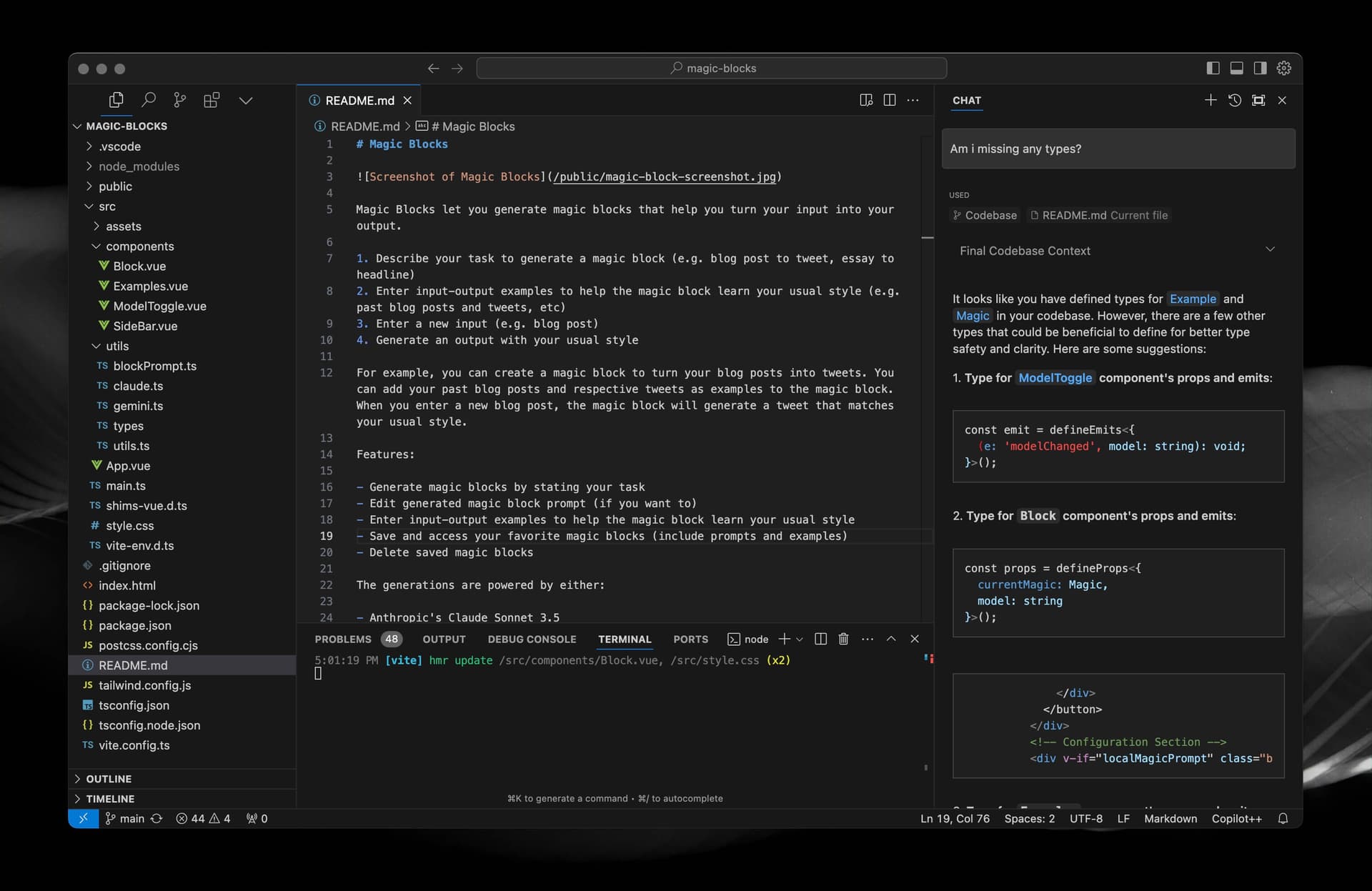

- Vue + Vite + TypeScript: I usually use React or Next.js but Swee Kiat is a fan of Vue and all our apps are built with Vue (and TypeScript). So I took this opportunity to improve my Vue and TypeScript. When I first started coding in Vue two years ago, I often got stuck and annoyed because the documentation is not as comprehensive as React's. But with more practice and the help of Cursor (more below), I have started to like Vue.

- Tailwind: Using Tailwind for styling feels second nature now. I did not use any UI libraries because this is a fairly simple app. I'd love to use shadcn/ui for my future projects. I signed up for Emil Kowalski's Animation on the web course, wanting to include more animated interactions in my apps, but honestly haven't watched much to apply anything useful. I only included a simple spinning animation from Tailwind while the generations are being processed.

- Others: Heroicons for icons, Marked for parsing the markdown returned by Google Gemini 1.5 Flash, and DOMPurify for sanitizing the HTML output from Marked.

Backend:

- OpenAI's GPT-4o and Google's Gemini 1.5 Flash: I created the app using Google's Gemini 1.5 Flash mostly because it has a generous free plan. While it's free and gave acceptable results, I got better results with Anthropic's Claude 3.5 Sonnet. But Anthropic doesn't allow calling their API from the browser (without a proxy server). In the end, I added OpenAI's GPT-4o.

- FastAPI: Initially, I took the opportunity to practice creating a server-side repo with FastAPI, which can store my API keys more securely. All our apps use FastAPI, and I have also been working through Practical Deep Learning for Coders by Fast.ai. I don't have much experience with backend programming but FastAPI has been pretty straightforward to use. But eventually, I decided to let users use their own API keys and no longer needed a backend.

Other tools:

- AWS (S3, CloudFront, AWS Certificate Manager): I have been keen to explore AWS (which we use for Pebblely but I don't access). I used to use Vercel (and still do for this blog). But I'm hesitant to use it for my products after seeing on Twitter that several builders received a hefty bill when their unoptimized apps got a spike in traffic. Because Vercel makes deployment a lot easier, I'm certain I would happily ship terrible, unoptimized code to production to my detriment. But honestly, AWS is so complicated that I likely made some mistakes too. That said, AWS has very generous free limits.

- GitKraken: I had a hard time understanding Git until Swee Kiat introduced me to GitKraken, which visualizes version control. I can't say I understand all the git commands well now but I'm much more comfortable with Git.

- Cursor: I'm going to talk about this next!

Building AI with AI

A fun aspect of building Magic Blocks is that I used AI to help me build it. Specifically, Cursor.

Before this, I used ChatGPT to help me with my coding problems. But it is hard to ask ChatGPT questions that span multiple files. And I don't want to keep copying and pasting my files into ChatGPT. I wanted something that is more native to my coding experience. GitHub Copilot first came to mind but I kept reading about Cursor on Twitter and decided to try it.

I love it.

A few thoughts and lessons learned:

- Building Magic Blocks took me about five days (first 80% took three days; the remaining 20% took two days). I don't think I would have coded that quickly without Cursor because I'm not exactly a professional coder.

- I have been using Cursor for two main things: code recommendations and debugging. About 80% of the code suggestions from Cursor were good enough to use. Copilot++, which automatically recommends code, sped up my coding a lot. It even suggests the next line of code that needs updating and lets me jump there by pressing Tab and update the code with its suggestion by pressing Tab again. When I got stuck with a bug or had an issue across multiple files, I used the chat function. I also used the chat when I needed help with terminal commands.

- One cool use case: I used it to improve my TypeScript by asking "Am I missing any types anywhere?" and got appropriate suggestions for various files. But it didn't catch all the missing types.

- One frustrating lesson: I spent about two hours trying to fix an issue only to realize it was because Cursor suggested the wrong code for using the Gemini API. It might have been because I was switching from Node.js to Python and somehow confused it. But shoutout to Cursor for recommending that I add logging (the Python logging module), which eventually helped me identify the error in sufficient detail, which in turn led me to check the documentation.

I'm sure there are even better ways to use Cursor. If you have any tips, please share them with me.

Learning to prompt LLMs for better results

While I wanted to solve my complaints about ChatGPT, I also wanted to learn more about Large Language Models (LLMs) and how I can build better products powered by LLMs. Using LLMs regularly helps me understand LLMs but building with LLMs helps me learn more about, well, building with LLMs. I realized I spent as much time prompt engineering as coding the app.

Here are some things I learned along the way:

First, a quick reminder: there are two main prompts in Magic Blocks, the block prompt and the magic prompt. The block prompt is for generating a magic block, and the magic prompt (generated by the block prompt) is for generating the transformed content.

1. Assign the LLM a role

This is a simple trick recommended by both OpenAI and Anthropic. According to Anthropic, "you can dramatically improve its performance by using the system parameter to give it a role."

I tried assigning the role at the system level and at the user level but it didn't seem to make a significant difference. The difference might matter more in a multi-turn chat app, where the system instructions apply to every user input in a conversation, whereas Magic Blocks is a single-input, single-output app. To keep my code simple, I assigned the role at the user level.
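To make the two options concrete, here is a minimal sketch in TypeScript. The `ChatMessage` shape is an assumption modeled on common chat-style APIs, and the function names are made up for illustration; this is not the actual Magic Blocks code.

```typescript
// Hypothetical message shape, modeled on common chat-style APIs.
type Role = "system" | "user";

interface ChatMessage {
  role: Role;
  content: string;
}

// Opening lines of the block prompt quoted in this essay.
const ROLE_TEXT =
  "You are an LLM-prompting expert. Your task is to generate Large-Language Model prompts for stated tasks.";

// Option 1: put the role in a separate system message.
function withSystemRole(task: string): ChatMessage[] {
  return [
    { role: "system", content: ROLE_TEXT },
    { role: "user", content: task },
  ];
}

// Option 2: prepend the role to the single user message.
function withUserRole(task: string): ChatMessage[] {
  return [{ role: "user", content: ROLE_TEXT + "\n\n" + task }];
}
```

With a single-input, single-output app, both variants send the same text once, which may be part of why I saw little difference.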

I started the prompt for generating magic blocks with:

You are an LLM-prompting expert. Your task is to generate Large-Language Model prompts for stated tasks.

For a given task, you create a prompt, known as a magic prompt, by first thinking about the key principles for the task and placing them in the <thoughts> tags.

Then come up with a title (no more than 5 words) for the assigned role and enclose it within <title> tags.

Finally, create the magic prompt and enclose it within <prompt> tags.

...

I had initially used "You are a block prompt creator" but I realized "block prompt creator" is not a real-world role and might not help the LLM understand what role it should take on. That said, both generated similar results. I suspect it's because my comprehensive prompt (at about 1,000 words) outweighs the effect of the role.

Also, the block prompt creates the magic prompt. Since the user's task must be specified in the block prompt to generate the magic prompt, I cannot assign a predefined role to the magic prompt. However, I can instruct the block prompt to generate a magic prompt with a role specific to the user's task:

...

Generally, here are the key principles:

1. Role assignment: Establish a specific role for the AI that's relevant to the task.

...

For example, when I generated a magic block for creating a headline from my essay, the prompt generated for the magic block was:

You are a headline generator specializing in crafting impactful titles from essays. Your task is to create a headline from an essay by following the transformation of the example essays and headlines.

2. Provide examples ("Show, not tell")

This is another prompting technique that is widely recommended (by OpenAI, Anthropic, Google, and industry practitioners). This is similar to asking anyone in real life for help, especially if we cannot describe exactly what we want. The more specific examples we can share, the better the other person can understand what we are looking for.

This is also especially helpful for getting results in a certain format. For instance, I want the generated magic prompt to be wrapped in <prompt> tags so that I can programmatically extract and put it in the newly created magic block.
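Here is a rough sketch of how that extraction could look in TypeScript. The helper names `extractTag` and `parseBlockResponse` are hypothetical, and the sketch assumes tag names are plain words (no regex special characters) and that each tag appears once.

```typescript
// Returns the trimmed text inside the first <tag>...</tag> pair, or null if absent.
// Assumes `tag` is a plain word such as "prompt" or "title".
function extractTag(response: string, tag: string): string | null {
  // [\s\S] matches any character including newlines; *? keeps the match non-greedy.
  const pattern = new RegExp("<" + tag + ">([\\s\\S]*?)</" + tag + ">");
  const match = pattern.exec(response);
  return match ? match[1].trim() : null;
}

// Hypothetical helper: pulls out the title and prompt for a new magic block.
// Returns null when either tag is missing, so the caller can show an error.
function parseBlockResponse(
  response: string
): { title: string; prompt: string } | null {
  const title = extractTag(response, "title");
  const prompt = extractTag(response, "prompt");
  if (title === null || prompt === null) {
    return null;
  }
  return { title: title, prompt: prompt };
}
```

Returning `null` instead of throwing keeps the "LLM ignored the format" case easy to handle in the UI.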

I included three examples in my block prompt for generating magic blocks. Here's one of them:

...

<example>

<task>

Tweet from blog post

</task>

<thoughts>

To create an effective prompt for turning a blog post into a tweet according to the provided examples, I'll apply the following key principles:

1. Role assignment: Define a role that combines writing and social media expertise.

2. Clear task definition: Explicitly state the goal of transforming a blog post into a tweet.

3. Analysis instruction: Ask for an examination of examples to understand the transformation process.

4. Personalization: Emphasize following the analysis of the transformation process to create personalized results. Do not give generic Twitter recommendations.

</thoughts>

<title>Social Media Expert</title>

<prompt>

You are a social media expert specializing in concise content creation. Your task is to distill lengthy blog posts into tweets by following the transformation of the example blog posts and tweets.

First, analyze the provided examples of blog posts and their corresponding tweets below. Consider the key transformations, such as condensation, headline adaption, key point extraction, emoji use, teaser creation, tone adjustment, context preservation, call-to-action, and hashtag use. Place and show your analysis in <analysis> tags.

Then, based on your analysis, convert the blog post provided below into a tweet (no more than 280 characters) accordingly. Follow the key transformations as closely as possible; do not follow generic Twitter recommendations.

</prompt>

</example>

...

(I'll explain the <thoughts> tags in the next point.)

Each magic block also requires the user to provide at least one example. This allows the magic block to "learn from the users' examples" (i.e. in-context learning or few-shot prompting) to generate personalized results.

The general recommendations for providing examples are:

- Aim for more than five examples. Even a few dozen would be fine.

- Provide relevant and diverse examples, matching potential users' inputs.

- Specify they are examples. I wrap them in <example> tags and nest them in <examples> tags.

I think there should be a caveat for the first recommendation. With a magic block specifically, if I provide multiple examples with inconsistent styles, the magic block might not be able to generate desirable outputs in my style, likely because there isn't one style.
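The wrapping itself can be sketched in a few lines of TypeScript. The `<example>` and `<examples>` tags are the ones I mentioned above; the inner `<input>` and `<output>` tags and the `formatExamples` name are assumptions for illustration.

```typescript
// One input-output pair provided by the user.
interface Example {
  input: string;
  output: string;
}

// Wraps each pair in <example> tags and nests them all in <examples> tags.
// The <input>/<output> tag names are an assumption, not the app's actual format.
function formatExamples(examples: Example[]): string {
  const items = examples.map(function (ex) {
    return (
      "<example>\n" +
      "<input>\n" + ex.input + "\n</input>\n" +
      "<output>\n" + ex.output + "\n</output>\n" +
      "</example>"
    );
  });
  return "<examples>\n" + items.join("\n") + "\n</examples>";
}
```

The resulting string can be appended to the magic prompt before the content to transform.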

3. Ask the LLM to think "out loud"

As silly as this might sound, asking the LLM to think before answering gives better results. Again, this is recommended across the board (OpenAI, Anthropic, Google, and industry practitioners).

This can be as simple as adding "Think step-by-step" to the prompt or as complex as specifying the exact steps to think through and asking the LLM to generate its thoughts. I went with the latter:

...

Now, create a magic prompt for the following task.

First, think about the key principles and specific challenges for the task and place them in the <thoughts> tags.

Then come up with a title (no more than 5 words) for the assigned role and enclose it within <title> tags.

Finally, create the magic prompt and enclose it within <prompt> tags.

...

Every's Spiral went as far as creating multiple steps in its app. First, the LLM generates a summary of the patterns from your examples. Then it generates your output by referring to the summary ({{ summaryPatterns }}).

You are an expert article summarizer. Your task is to summarize a {{ inputFormat }} into a {{ outputFormat }} that follows the following style:

{{ summaryPatterns }}

Here are some examples:

{{ examples }}

Here's a {{ outputFormat }} made from a {{ inputFormat }}, please create a {{ outputFormat }} that follows the style above.

{{ newInput }}

Think step-by-step about the main points of the {{ inputFormat }} and write a {{ outputFormat }} that follows the style and structure above. Pay attention to the examples given above and try to match them as closely as possible. Do not include any pre-ambles or post-ambles. Return text answer only, do not wrap answer in any XML tags.

Breaking complex tasks into smaller steps tends to give better results. But that also means calling the API multiple times, which increases the cost and generation time. It also increases the number of steps for users, which could be good or bad, depending on your users' tech-savviness.
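As a rough sketch, a two-step flow like this could look as follows in TypeScript. The `callLlm` parameter stands in for whichever API client you use, and both templates are shortened placeholders I made up, not Spiral's actual prompts.

```typescript
// Any function that sends a prompt to an LLM and resolves with its text reply.
type CallLlm = (prompt: string) => Promise<string>;

// Replaces {{ name }} placeholders with values; unknown names are left untouched.
function fillTemplate(
  template: string,
  values: { [key: string]: string }
): string {
  return template.replace(
    /\{\{\s*(\w+)\s*\}\}/g,
    function (whole: string, name: string) {
      return Object.prototype.hasOwnProperty.call(values, name)
        ? values[name]
        : whole;
    }
  );
}

// Hypothetical, shortened templates for illustration only.
const SUMMARY_TEMPLATE =
  "Summarize the style patterns in these examples:\n{{ examples }}";
const OUTPUT_TEMPLATE =
  "Follow this style:\n{{ summaryPatterns }}\n\nExamples:\n{{ examples }}\n\nInput:\n{{ newInput }}";

async function generateInTwoSteps(
  callLlm: CallLlm,
  examples: string,
  newInput: string
): Promise<string> {
  // Step 1: ask the LLM to summarize the patterns in the examples.
  const summaryPatterns = await callLlm(
    fillTemplate(SUMMARY_TEMPLATE, { examples: examples })
  );
  // Step 2: generate the output, referring to the summary from step 1.
  return callLlm(
    fillTemplate(OUTPUT_TEMPLATE, {
      summaryPatterns: summaryPatterns,
      examples: examples,
      newInput: newInput,
    })
  );
}
```

The two sequential `callLlm` calls are where the extra cost and waiting time come from.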

4. Have a fallback

I cannot remember where I learned this from (likely Linus Lee) but I think it's a good idea to have a fallback prompt. By default, the LLM would guess the user's intent and generate something from its guess. But the result might not be good enough. I believe that having a fallback prompt makes the app more robust. If the user's prompt is not clear, the fallback prompt makes the LLM ask the user to refine their prompt.

For example, I appended this to the end of my prompt:

If you do not understand the block description or do not have enough context, please respond with "I don't understand. Please refine your block description." or "I don't have enough context. Please refine your block description."

When I simply entered "random" as an input, I got different results with and without the fallback prompt.

# Without the fallback prompt

You are a Random Content Generator. Your task is to create unique and unpredictable content in the specified format. Generate random text, code, or other outputs without repeating previous responses.

# With the fallback prompt

I don't understand. Please refine your block task.

This shows that the LLM does understand when it doesn't have enough context but would force a guess without the fallback prompt. This creates a non-ideal experience for the user (terrible result and unclear next step). We should use this understanding to guide the user to improve their input, instead of dumping a nonsense output.

Unlike programming, which is deterministic, the LLM might not always catch bad prompts. But from my experience, it seems good enough.
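On the app side, the fallback reply still has to be detected so the user sees guidance instead of a broken magic block. Here is a minimal sketch in TypeScript; the `classifyResponse` name and the `BlockResult` type are hypothetical. It matches only the opening sentence because, as the example above shows, the LLM may reword the rest of the message.

```typescript
// Opening sentences of the two fallback replies requested in the prompt.
const FALLBACK_OPENERS = [
  "I don't understand.",
  "I don't have enough context.",
];

type BlockResult =
  | { kind: "needs_refinement"; message: string }
  | { kind: "ok"; response: string };

// Checks whether the LLM used the fallback instead of generating a block.
function classifyResponse(response: string): BlockResult {
  const text = response.trim();
  for (let i = 0; i < FALLBACK_OPENERS.length; i++) {
    // indexOf(...) === 0 means the reply starts with the fallback sentence.
    if (text.indexOf(FALLBACK_OPENERS[i]) === 0) {
      return { kind: "needs_refinement", message: text };
    }
  }
  return { kind: "ok", response: text };
}
```

A `needs_refinement` result can be shown next to the input field, while an `ok` result goes on to be parsed into a magic block.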

5. Evaluate systematically

Well, by right, I should have created a few test cases and compared how the outputs vary with different prompts. Or maybe even use another LLM to judge.

But honestly, I simply did vibe checks. I tested a few use cases and iterated rather unsystematically until I saw that the outputs were good enough. I realized that the amount of testing would multiply quickly since I was generating a prompt to generate an output. If I tested three prompt-generating prompts with three inputs each, I would have 9 outputs to evaluate. Five prompt-generating prompts with five inputs each would be 25 outputs.
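The counting above can be sketched as a small test grid in TypeScript. This is only the enumeration step, with hypothetical names; running each case and judging the outputs is the part I have not built.

```typescript
// One combination to evaluate: a prompt-generating prompt paired with an input.
interface EvalCase {
  blockPrompt: string;
  input: string;
}

// Pairs every block prompt with every input, so the number of cases is
// blockPrompts.length * inputs.length.
function buildEvalGrid(blockPrompts: string[], inputs: string[]): EvalCase[] {
  const cases: EvalCase[] = [];
  for (let i = 0; i < blockPrompts.length; i++) {
    for (let j = 0; j < inputs.length; j++) {
      cases.push({ blockPrompt: blockPrompts[i], input: inputs[j] });
    }
  }
  return cases;
}
```

Three block prompts and three inputs give a grid of 9 cases; five and five give 25.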

If you know reasonable and feasible ways I can evaluate my prompts more systematically, please teach me!

What's ahead?

This is just the start of this project, and there are many exciting ideas to explore. For example, would I get better results if I split the magic block into two LLM calls instead of one (one to analyze the examples and one to generate the output based on the result of the first)? How do I process and generate videos? What's the best interface for chaining blocks?

I started this project to scratch my itch to build and satisfy my curiosity about building products with LLMs. It was meant to be a quick experiment to create an open-source repo for people to run locally. But I kept increasing the scope until I had a working product. While there are many more things to build and equally many things to learn about building with LLMs, the current stage felt like a good place to take a step back, reflect, and consolidate my learnings. Hence, this essay.

Honestly, I may or may not continue building Magic Blocks. I may also explore other LLM ideas. I'm not sure. We shall see! But despite that, if you're still interested in pre-ordering a subscription to support me in this project, please feel free to reach out.

Thank you for reading, and happy building!

Thanks, Lim Swee Kiat and Justin Lee, for reading and commenting on my draft.